Amplify AI Platform

UNC System's New AI Platform: Amplify


Amplify: Generative AI for the UNC System Office

Secure AI for the UNC System Office

Beta Launch: Amplify

We’re excited to launch the Beta version of Amplify to the UNC System organization. With built-in privacy, guardrails, and secure data storage, Amplify enables responsible AI at scale. Amplify supports the creation of custom AI assistants grounded in departmental documents, making it ideal for specialized workflows. With reusable prompt templates, built-in helpers for generating summaries and reports, and embeddable URLs for integration with organizational websites, Amplify transforms how the UNC System Office approaches innovation and data analysis, all within a secure environment that adheres to Tier 1 and Tier 2 data classification standards. Use your SO credentials to log in.

Security & Governance

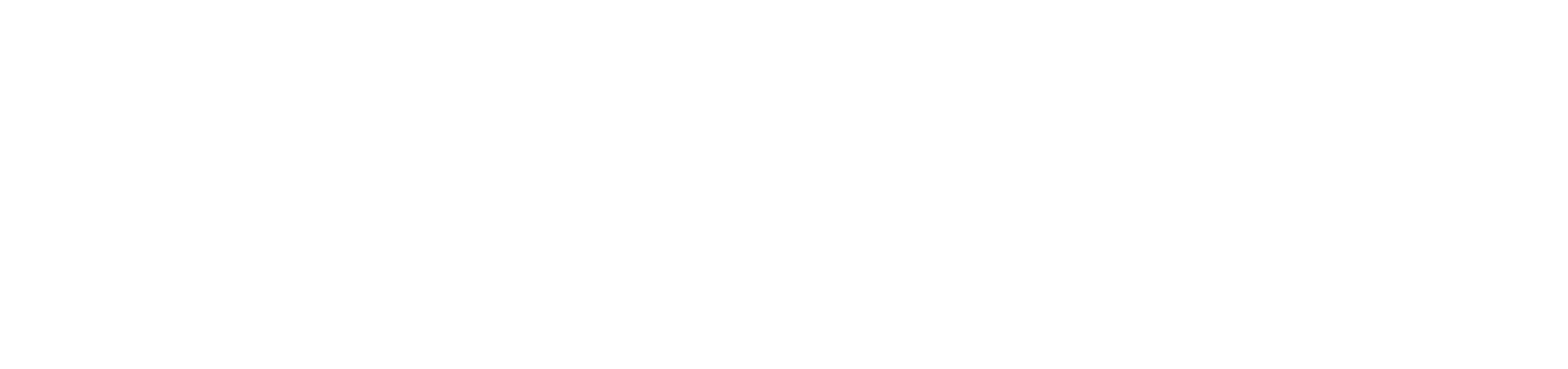

Amplify’s Interface

Interface Highlights

Conversations

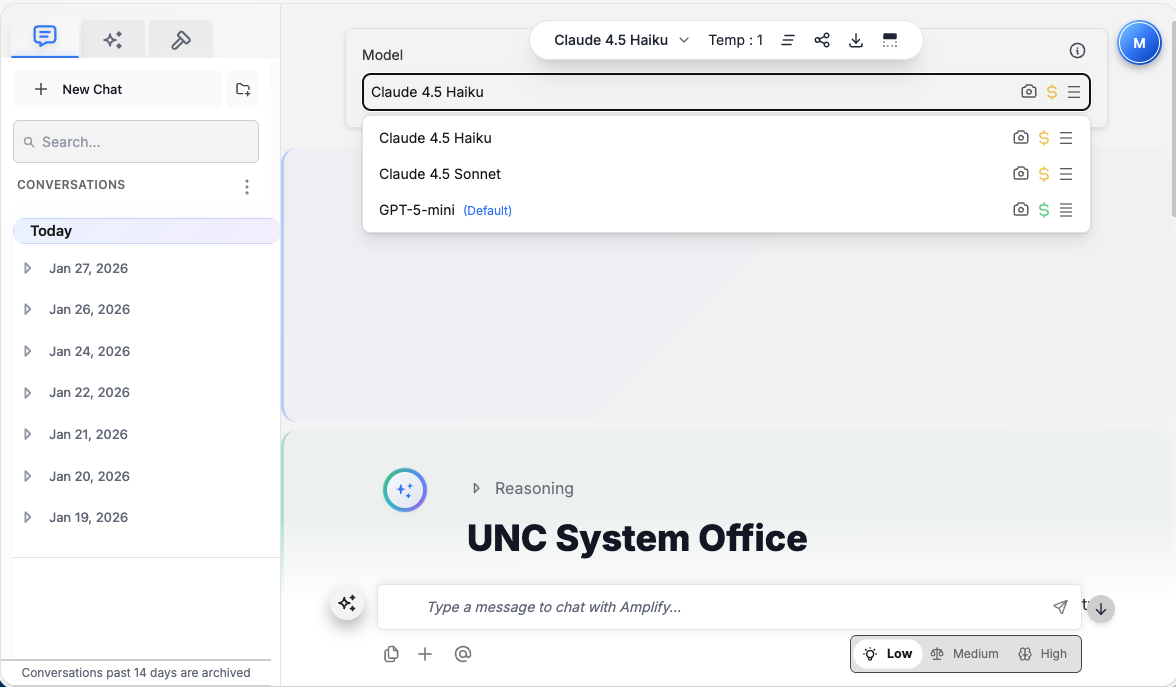

Assistants

Prompt Templates & Helpers

Key Features Summary

About Our Platform

Amplify is the new AI platform for the System Office. It is an open-source platform originally developed by Vanderbilt University and released to the public. The platform is built on AWS and provides a secure, responsible way to interact with a variety of LLMs in one application. It includes a library of models hosted by AWS Bedrock and can also bring in OpenAI and Google models. Data uploaded and shared in the environment stays in the UNCSO tenant and is not shared with any of the large language model providers.

Amplify FAQs

Amplify has a “send feedback” menu option that opens your default email client with the support email address filled in. Sending the email creates a ticket for review. UNCSO IT will build roadmaps of features and bug fixes to work on, either internally or in collaboration with the Amplify open-source community, so that the feature set keeps growing in response to requests.

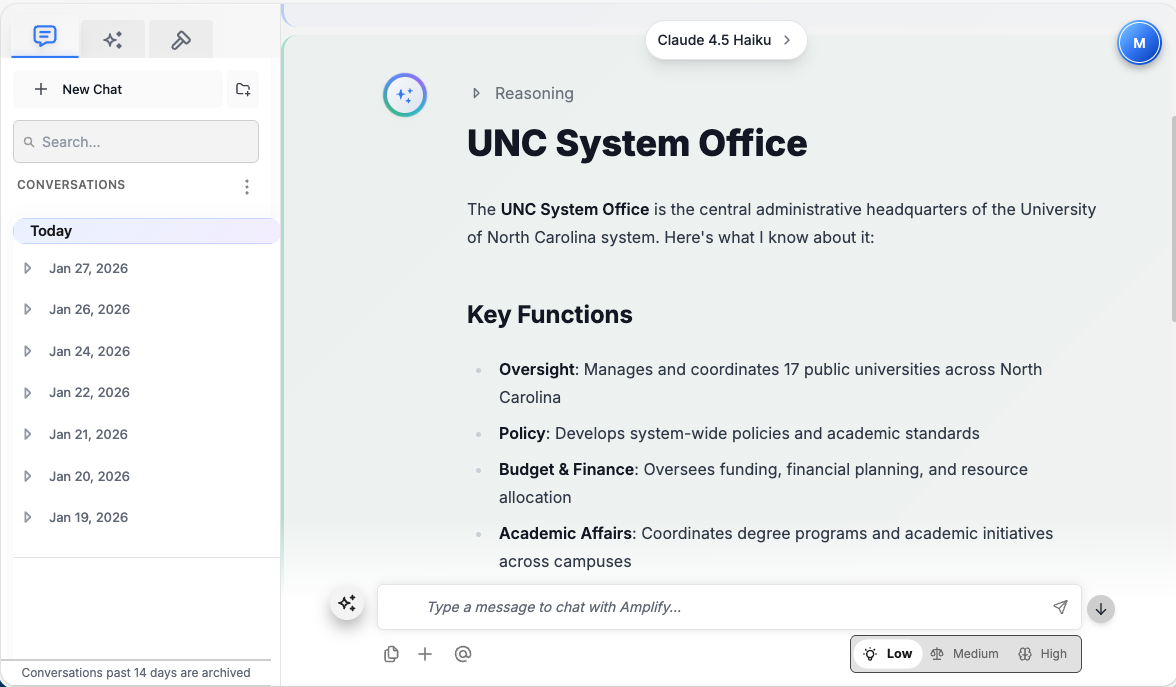

Amplify provides access to a variety of Large Language Models. UNCSO is currently releasing the following models for use. We will add/remove models as more advanced ones become available at acceptable token costs. All models provided by AWS Bedrock will have additional guardrails applied.

- Claude 3.5 Haiku (Default Model)

- Claude 4 Sonnet

- GPT4-mini (comes from OpenAI directly and does not have guardrails applied)

- GPT-4o (comes from OpenAI directly and does not have guardrails applied)

- gpt-oss-120b

- Mixtral 8x7B

1. Data Security and Use within Amplify

When you use public generative AI tools, the data you enter is often shared with the company that created the tool and may be used for various purposes, including model training. Amplify, on the other hand, is an internal UNC System Office tool. Our agreements with the AI model providers prohibit them from using our data for model training. This ensures that any information you enter into Amplify remains secure and is not used by any external entities.

The UNC System Office classifies data into three levels of sensitivity. At this time, you may use information classified as Level 1 (Public), Level 2 (Internal), and Level 3B (Regulated) within Amplify. However, protected personally identifiable information (PII) such as SSNs, driver's license numbers, passport numbers, financial account information, and credit card information protected by PCI DSS, as well as data protected under regulations such as HIPAA, GLBA, and CJIS, is strictly prohibited.

UNC Data Classification Tiers

✅ Tier 1 – Public Data (Safe for Amplify)

- Press releases and published research

- Website content and marketing materials

- Publicly available reports and documents

- Any information approved for public distribution

✅ Tier 2 – Internal Data (Safe for Amplify)

- Internal memos and communications

- Non-public drafts and planning documents

- Training materials and procedural guides

- Non-sensitive project documentation

❌ Tier 3 – Confidential Data (NOT Allowed in Amplify)

- Social Security Numbers (SSNs)

- FERPA-protected student records

- Protected Health Information (PHI) or medical records

- Financial account information or banking data

- System credentials and passwords

- Any legally regulated or personally identifiable information (PII)

2. Important Guidelines

- When in doubt, assume higher sensitivity and avoid entering the data

- Use de-identified data where appropriate

- If you’re unsure about a data type, consult your department’s Data Steward or the UNC System Office data governance team before proceeding

- Amplify does not train on your data – conversations remain private within UNC’s secure environment
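As a rough illustration of de-identifying text before entering it, the sketch below flags and redacts strings that look like formatted Social Security Numbers. The function names and the pattern are hypothetical and are not part of Amplify. The pattern only catches the common 3-2-4 digit layout, so it is an aid to manual review of Tier 3 data, not a substitute for it.

```python
import re

# Hypothetical pre-check: matches only the common 3-2-4 digit SSN layout,
# separated by dashes or spaces. Other Tier 3 data is NOT detected.
SSN_LIKE = re.compile(r"\b\d{3}[- ]\d{2}[- ]\d{4}\b")


def find_ssn_like(text: str) -> list[str]:
    """Return every substring that resembles a formatted SSN."""
    return SSN_LIKE.findall(text)


def redact_ssn_like(text: str, placeholder: str = "[REDACTED]") -> str:
    """Replace SSN-like substrings with a placeholder."""
    return SSN_LIKE.sub(placeholder, text)


if __name__ == "__main__":
    sample = "Employee 123-45-6789 requested a transcript."
    print(find_ssn_like(sample))
    print(redact_ssn_like(sample))
```

A check like this can be run on text before it is pasted into a chat; anything it flags should be removed or replaced first.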

3. What if I accidentally enter Tier 3 data?

- Immediately stop using that conversation

- Delete the conversation from your Amplify history

- Report the incident to your department lead or IT security contact

- Do not continue using that chat session

For complete guidance, refer to the UNC Data Classification Policy or contact ai@northcarolina.edu.

4. Supported File Types

Amplify currently works with most text-based files. Image and video files are not currently supported. The following file types are supported:

- Comma-Separated Values (.csv)

- Compressed file (.zip)

- Excel spreadsheets (.xlsx)

- Hypertext Markup Language – HTML (.html)

- JavaScript (.js)

- JSON format (.json)

- Markdown (.md)

- Plain text (.txt)

- Portable Document Format (.pdf)

- PowerPoint Presentation (.pptx)

- Python format (.py)

- Word documents (.docx)

*If your file fails to upload or is not recognized, try saving it in a different format (e.g., converting a complex PDF to a plain text file or a different PDF version).
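For anyone preparing a batch of files, a quick extension check against the list above can save a failed upload. This is a minimal sketch: `SUPPORTED_EXTENSIONS` and `is_supported` are illustrative names, the set simply mirrors the list in this section, and Amplify's own validation may differ.

```python
from pathlib import Path

# Extensions copied from the supported file type list in this section.
SUPPORTED_EXTENSIONS = {
    ".csv", ".zip", ".xlsx", ".html", ".js", ".json",
    ".md", ".txt", ".pdf", ".pptx", ".py", ".docx",
}


def is_supported(filename: str) -> bool:
    """Return True if the file's extension appears in the supported list."""
    return Path(filename).suffix.lower() in SUPPORTED_EXTENSIONS


if __name__ == "__main__":
    for name in ("Q4_Sales_Report_2023.xlsx", "meeting_recording.mp4"):
        print(name, is_supported(name))
```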


1. AI Model Limitations

Understanding these limitations helps you use Amplify effectively and set appropriate expectations for AI outputs.

No Conversational Memory Between Chats

Amplify AI models do not “remember” information from one chat conversation to another. Each new chat starts with a clean slate.

What This Means:

- If you tell the AI your name in one chat and open a new chat (even with the same model), you’ll need to re-introduce yourself

- Context from previous conversations is not carried over

- The AI cannot reference work done in other chat sessions

- Each conversation is completely independent

Workaround:

- Continue working in the same chat thread for related tasks

- Use Custom Instructions to set persistent context at the start of each new chat

- Create Assistants with your frequently-used context and documents pre-loaded

- Copy and paste relevant context from previous chats when starting a new one

Hallucinations

AI models may confidently produce incorrect or fabricated information—a phenomenon known as “hallucination.”

Warning Signs:

- Overly specific details without sources

- Statistics that seem too precise or convenient

- Citations to documents or studies you can’t verify

- Contradictions within the same response

Best Practices:

- Always verify important facts, statistics, dates, and claims

- Cross-reference outputs with authoritative sources

- Use AI for drafting and brainstorming, not as a final authority

- Compare outputs from different models (GPT-4 vs. Claude 3)

Knowledge Cutoff

Models have training data only up to certain dates and may not know recent events or changes.

What This Means:

- GPT-4: Knowledge cutoff varies by version

- Claude 3: Knowledge cutoff varies by version

- Cannot provide information about events after training date

- May have outdated information on rapidly changing topics

Workaround:

- Use models with web search capabilities (GPT-4o, GPT-4-mini) for current information

- Upload recent documents to provide the AI with current context

- Verify time-sensitive information independently

Context Window Limits

There’s a limit to how much text the AI can process at once. This varies by model:

- GPT-4: Typically 8,000-32,000 tokens (varies by version)

- Claude 3: Can handle up to 200,000 tokens (very large documents)

- GPT-3.5: Typically 4,000-16,000 tokens

What This Means:

- Very long documents may need to be processed in sections

- Long conversation histories may cause earlier messages to be “forgotten”

- Extremely large files may exceed processing limits

Workaround:

- Use Claude 3 for very long documents (it has the largest context window)

- Break large documents into logical sections

- Start a new chat if conversations become very long

- Summarize long conversation threads and start fresh with the summary
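One way to break a large document into sections is to group paragraphs until an approximate token budget is reached. The sketch below assumes roughly 4 characters per token, which is only a rule of thumb (real tokenizers vary by model), and the function names are illustrative rather than part of Amplify.

```python
def estimate_tokens(text: str, chars_per_token: int = 4) -> int:
    """Rough estimate only; assumes about 4 characters per token."""
    return max(1, len(text) // chars_per_token)


def split_into_sections(text: str, max_tokens: int) -> list[str]:
    """Group paragraphs into sections of roughly max_tokens or fewer.

    A single paragraph longer than max_tokens is kept whole as its own section.
    """
    sections: list[str] = []
    current: list[str] = []
    current_tokens = 0
    for paragraph in text.split("\n\n"):
        paragraph = paragraph.strip()
        if not paragraph:
            continue
        tokens = estimate_tokens(paragraph)
        # Close the current section before it would exceed the budget.
        if current and current_tokens + tokens > max_tokens:
            sections.append("\n\n".join(current))
            current, current_tokens = [], 0
        current.append(paragraph)
        current_tokens += tokens
    if current:
        sections.append("\n\n".join(current))
    return sections
```

Splitting on paragraph boundaries keeps each section readable on its own, which matters more for summary quality than hitting the budget exactly.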

2. Platform Limitations

No Web Search for Most Models

At this time, most Amplify models cannot search the web or access specific websites you reference.

What This Means:

- Models can only use information from their training data and files you upload

- Cannot fetch current information from websites

- Cannot verify facts against live web sources

- Cannot access paywalled or login-protected content

Models WITH Web Search:

- GPT-4o – Has live web searching capabilities

- GPT-4-mini – Has live web searching capabilities

Models WITHOUT Web Search:

- All AWS Bedrock models (Claude, other non-OpenAI models)

- Standard GPT-4 (without “o” designation)

Workaround:

- Use GPT-4o or GPT-4-mini when you need current web information

- Manually search for information and upload relevant documents

- Copy and paste web content into your chat (ensure Tier 1 or Tier 2 data only)

- Provide URLs for reference, but be aware the AI cannot access them directly (except GPT-4o/mini)

No Prompt Chaining Automation

Amplify does not automatically chain prompts together. You must manually manage multi-step workflows.

What is Prompt Chaining?

Prompt chaining is breaking down a complex task into a series of smaller, more manageable prompts that work together in sequence. Instead of asking an AI to do everything at once, you guide it through a step-by-step process where the output of one prompt becomes the input for the next.

Example of Prompt Chaining:

- Prompt 1: “Analyze this sales data and identify the top 3 trends”

- Prompt 2: “Based on these trends, draft recommendations for Q2 strategy”

- Prompt 3: “Format these recommendations as an executive summary”

Why Manual Chaining Works Better:

- You review output at each step before proceeding

- You can adjust the direction based on intermediate results

- Better quality control throughout the process

- Easier to identify where issues occur

Best Practice:

Break complex tasks into discrete steps and work through them sequentially in the same chat. This gives you control over each stage while maintaining conversation context.
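The structure of a chain, with a review checkpoint after each step, can be sketched in a few lines. Here `ask` is a stand-in for however a prompt gets sent and answered (typing it into the chat, or a script of your own); it is a hypothetical callable, not an Amplify function. `review` represents the human check between steps.

```python
from typing import Callable

# The three prompts from the example in this section; {previous} marks
# where the prior step's output is inserted.
CHAIN = [
    "Analyze this sales data and identify the top 3 trends:\n{previous}",
    "Based on these trends, draft recommendations for Q2 strategy:\n{previous}",
    "Format these recommendations as an executive summary:\n{previous}",
]


def run_chain(
    ask: Callable[[str], str],
    first_input: str,
    review: Callable[[int, str], bool],
) -> list[str]:
    """Run each prompt in order, feeding each output into the next prompt.

    `ask` is a hypothetical stand-in for sending a prompt and getting a reply.
    `review` is called after each step and returns False to stop the chain.
    """
    outputs: list[str] = []
    previous = first_input
    for step, template in enumerate(CHAIN, start=1):
        output = ask(template.format(previous=previous))
        outputs.append(output)
        if not review(step, output):
            break  # stop so the step can be corrected before continuing
        previous = output
    return outputs
```

Stopping at the first rejected step mirrors the manual workflow: the issue is fixed where it occurs instead of being carried into later prompts.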

Mobile Experience Limitations

- Optimized for desktop/laptop use

- Mobile access is limited in features and functionality

- File uploads may not work on all mobile browsers

- Template and Assistant creation is difficult on mobile

Recommendation:

Use a desktop or laptop with a modern browser (Chrome, Edge, Firefox) for substantive work. Reserve mobile for quick reference or simple queries.

Browser Compatibility

- Works best with modern browsers (Chrome, Edge, Firefox)

- Older browsers may have issues

- Some features may not function properly on unsupported browsers

3. Content Limitations

No Image Generation

Amplify cannot currently create or generate images.

What You Cannot Do:

- Generate AI images from text descriptions

- Create charts, graphs, or visualizations directly

- Design logos or graphics

- Edit or modify existing images

What You CAN Do:

- Get the AI to write detailed descriptions for images you want to create elsewhere

- Generate data tables that you can use to create charts in Excel

- Create structured data outputs for visualization in other tools

No Video or Audio Analysis

Amplify does not currently accept or analyze video and audio files.

What This Means:

- Cannot transcribe audio recordings

- Cannot analyze video content

- Cannot extract information from multimedia presentations

- Cannot process podcast or meeting recordings

Workaround:

- Use external transcription services, then upload the text transcript

- Manually transcribe key portions you need analyzed

- Extract audio/video content to text format before using Amplify

Not a Replacement for Human Judgment

- AI is a drafting assistant; all outputs require human review and verification

- May reflect biases present in training data

- Should not be relied upon for professional legal or medical advice

- May lack deep expertise in highly specialized fields

File Size and Format Limits

- Large files (typically over several MB) may exceed processing limits

- Scanned PDFs may require OCR and might not be perfectly readable

- Highly formatted documents may lose some formatting in processing

- Protected or encrypted files cannot be processed

4. Workarounds and Best Practices

General Best Practices to Mitigate Limitations:

- Cross-reference outputs: Always verify important information with authoritative sources

- Use for drafting: Treat AI as a first-draft tool, not a final authority

- Compare models: Test prompts with different models (GPT-4 vs. Claude 3) for comparison

- Start small: Begin with low-stakes tasks to build confidence and understanding

- Provide context: Upload relevant documents to ground AI responses in accurate information

- Break down tasks: Use prompt chaining for complex, multi-step projects

- Report issues: Let us know about unexpected behavior at ai@northcarolina.edu

When to Use Specific Models:

- Need current web information? Use GPT-4o or GPT-4-mini

- Processing long documents? Use Claude 3 (largest context window)

- Need structured, technical output? Use GPT-4

- Quick, simple tasks? Use GPT-3.5 (fastest, most cost-effective)

Usage Limitations Reminders:

- Tier 3 Data Prohibited: Never process regulated or highly sensitive information

- Performance Reviews: Should not be used to draft employee evaluations without supervisor approval

- Disclosure Required: AI assistance should be disclosed where appropriate in official documents

Understanding these limitations helps you use Amplify more effectively and set appropriate expectations. Most limitations have workable solutions or alternative approaches.

Quick Navigation

- Appropriate Uses

- Required Human Oversight

- Best Practices

- Disclosure Guidelines

- Documents Requiring Extra Caution

1. Appropriate Uses

Yes, Amplify is an excellent drafting assistant for official documents, but it’s essential to understand your responsibility in the process.

What Amplify Can Help With:

- Drafting initial versions of reports, memos, and policies

- Creating outlines and document structures

- Rewriting content for clarity or different audiences

- Generating multiple versions for comparison

- Formatting and organizing existing content

- Expanding bullet points into full paragraphs

- Creating executive summaries from longer documents

2. Required Human Oversight

You are responsible for all content you submit – AI is a tool, not an author.

Your Responsibilities:

- All AI-generated content must be thoroughly reviewed and verified

- Check facts, statistics, dates, and citations for accuracy

- Ensure tone and style match UNC System Office standards

- Verify compliance with relevant policies and regulations

- Confirm that all information is current and correct

- Remove any hallucinations or incorrect information

- Ensure the document serves its intended purpose

Why Human Review is Critical:

- Accuracy: AI can confidently produce incorrect information

- Context: AI may not understand organizational nuances or history

- Compliance: AI doesn’t know all institutional policies and requirements

- Accountability: You, not the AI, are accountable for the final document

- Quality: Human judgment ensures the document meets professional standards

3. Best Practices

The Drafting Process:

Step 1: Use AI for First Drafts

- Provide clear context and requirements in your prompt

- Upload relevant reference documents

- Specify the audience and purpose

- Request a specific format or structure

Step 2: Review Outputs Carefully

- Read the entire document thoroughly

- Verify all factual claims and data

- Check for logical flow and coherence

- Ensure the tone is appropriate

Step 3: Edit and Refine

- Rewrite sections to match your voice and intent

- Add missing context or institutional knowledge

- Remove any generic or placeholder content

- Strengthen weak arguments or explanations

Step 4: Verify with Authoritative Sources

- Cross-reference statistics with official reports

- Confirm policy statements with actual policy documents

- Verify dates and timelines

- Check citations and references

Step 5: Get Expert Review

- Have a subject-matter expert review technical or sensitive content

- Get supervisor approval before finalizing

- Share drafts with relevant stakeholders for feedback

- Consider legal or compliance review where appropriate

Quality Control Checklist:

- ☐ All facts and statistics verified

- ☐ Tone and style appropriate for audience

- ☐ Complies with UNC System Office standards

- ☐ No hallucinated or fabricated information

- ☐ Citations and sources are accurate

- ☐ Document structure is logical and clear

- ☐ Grammar and spelling are correct

- ☐ Relevant policies and regulations addressed

- ☐ Appropriate approvals obtained

4. Disclosure Guidelines

When appropriate, disclose AI assistance in official documents to maintain transparency.

When to Disclose:

- Official reports distributed beyond your immediate team

- External communications to stakeholders

- Published materials or public-facing documents

- When required by your department’s policies

- When the document’s authenticity might be questioned

When Disclosure May Not Be Necessary:

- Internal drafts and working documents

- Routine emails or memos

- When AI was only used for grammar checking or formatting

- Documents that undergo significant human revision

Example Disclaimers:

Standard Disclosure:

“This content was drafted with the assistance of generative AI and reviewed by a UNC staff member.”

Detailed Disclosure:

“Portions of this document were generated using Amplify AI to assist with drafting. All content has been reviewed, verified, and edited by [Name/Department] to ensure accuracy and compliance with UNC System Office standards.”

Minimal Disclosure:

“AI tools were used to assist in the preparation of this document.”

Best Practice:

Consult your supervisor about disclosure requirements for your specific department and document type. Different departments may have different standards.

5. Documents Requiring Extra Caution

Some document types require additional scrutiny and may not be appropriate for AI assistance in all cases.

High-Risk Documents:

Legal Documents or Contracts

- Must have legal review

- High risk of incorrect legal language

- Binding obligations require precision

- AI may not understand legal implications

- Recommendation: Use AI only for initial outlining; have legal counsel draft actual language

Personnel Evaluations or Performance Reviews

- Should not be used without supervisor approval

- Requires personal knowledge and judgment

- Can have significant career implications

- Must be based on observed performance, not AI generation

- Recommendation: Write these yourself based on actual observations

Official Communications to External Stakeholders

- Represents the university officially

- May have political or reputational implications

- Requires careful tone and messaging

- Recommendation: Use AI for drafting only; extensive human review required

Compliance-Related Documentation

- Subject to regulatory requirements

- Errors can have legal consequences

- Must cite specific regulations accurately

- Recommendation: Compliance officer must review all AI-generated content

Financial Reports or Budget Materials

- Requires accurate calculations and data

- Subject to audit and oversight

- Errors can have financial implications

- Recommendation: Verify all numbers independently; use AI only for narrative portions

Red Flags – When NOT to Use AI:

- When you don’t have the expertise to verify the output

- For time-sensitive documents without time for thorough review

- When personal experience or observation is required

- For documents with strict formatting or citation requirements you can’t verify

- When the stakes are too high for any errors

Remember: Amplify enhances your productivity but does not replace your expertise, judgment, or accountability. You are always the author of record for any document you submit.

Quick Navigation

- Supported File Types

- How to Upload Files

- Common Use Cases

- Effective File Upload Prompts

- Tips and Limitations

1. Supported File Types

Yes! File upload is one of Amplify’s most powerful features, enabling you to quickly analyze, summarize, and extract information from documents.

Document Files:

- PDFs (.pdf): Research papers, reports, scanned documents

- Word Documents (.docx): Drafts, policies, memos

- PowerPoint (.pptx): Presentations and slide decks

- Text Files (.txt): Plain text documents, notes

- Markdown (.md): Documentation and formatted text

- HTML (.html): Web pages and formatted content

Data Files:

- Excel Spreadsheets (.xlsx): Data tables, budgets, analysis

- CSV Files (.csv): Data exports, lists, databases

- JSON (.json): Structured data files

Code Files:

- Python (.py): Python scripts and programs

- JavaScript (.js): JavaScript code

- Other code formats: Various programming languages

Compressed Files:

- ZIP (.zip): Compressed archives containing multiple files

2. How to Upload Files

Step-by-Step Process:

Step 1: Start or Continue a Conversation

Open a new chat or use an existing conversation thread in Amplify.

Step 2: Click the File Upload Icon

Look for the upload button or paperclip icon in the chat input area at the bottom of your screen.

Step 3: Select Your File

Choose the file from your computer. Larger files may take a moment to upload—you’ll see a progress indicator.

Step 4: Wait for Upload Completion

The file will appear in your chat once uploaded. You may see a file icon or preview depending on the file type.

Step 5: Enter Your Prompt

Describe what you want the AI to do with the file. Be specific about your goals and desired output format.

Important Reminders:

- Ensure files contain only Tier 1 or Tier 2 data

- Never upload files with Tier 3 confidential information (SSNs, medical records, financial account data, etc.)

- Review the file content before uploading to ensure compliance

3. Common Use Cases

Summarization:

Basic Summary

“Summarize this 50-page report in 3 key points”

Executive Summary

“Create an executive summary of this document in 2 paragraphs, focusing on recommendations”

Bullet Point Summary

“Summarize the main findings from this research paper as a bulleted list”

Data Analysis:

Trend Identification

“What trends do you see in this sales spreadsheet?”

Statistical Analysis

“Analyze this data and identify any outliers or unusual patterns”

Data Interpretation

“Explain what these quarterly results mean in plain language”

Information Extraction:

Action Items

“Pull all action items from this meeting notes document and list them with responsible parties”

Key Dates

“Extract all important dates and deadlines from this project plan”

Contact Information

“Create a list of all names, titles, and contact information mentioned in this document”

Document Comparison:

Policy Comparison

“Compare these two policy drafts and highlight the key differences”

Version Analysis

“What changed between version 1 and version 2 of this document?”

Formatting and Transformation:

Data Restructuring

“Convert this data into a formatted table”

Format Conversion

“Take this spreadsheet data and create a narrative report”

Translation and Simplification:

Plain Language

“Explain this technical document in plain language for non-experts”

Audience Adaptation

“Rewrite this report for an executive audience, focusing on strategic implications”

4. Effective File Upload Prompts

The Anatomy of a Good Prompt:

Vague Prompt (Not Recommended):

“Analyze this data”

❌ Too broad, doesn’t specify what kind of analysis or output format

Better Prompt:

“This is Q4 2023 sales data. Identify the top 3 performing products by revenue and explain any notable trends you observe.”

✓ Provides context, specifies what to look for, asks for explanation

Best Prompt:

“Analyze this Q4 2023 sales data (file attached). Create a summary that includes: 1) Top 3 products by revenue with percentage of total, 2) Month-over-month growth trends, 3) Any products with declining sales. Format as a brief memo suitable for executive review.”

✓✓ Context, specific deliverables, format specification, intended audience

Prompt Best Practices:

Be Specific About What You Want:

- “Create an executive summary” instead of just “Summarize”

- “List all recommendations” instead of “What does it say?”

- “Identify risks and opportunities” instead of “Analyze”

Specify the Format:

- “Provide bullet points”

- “Write 2-3 paragraphs”

- “Create a numbered list”

- “Format as a table”

- “Present as Q&A format”

Set the Context:

- “This is a budget proposal for IT infrastructure”

- “This meeting was about the Q2 planning process”

- “This policy applies to faculty hiring procedures”

- “This data covers the 2023 fiscal year”

Request Specific Sections or Focus:

- “Focus on the financial recommendations”

- “Pay special attention to the implementation timeline”

- “Emphasize the risks and mitigation strategies”

- “Highlight any budget implications”

Specify Your Audience:

- “For executive leadership”

- “For technical staff”

- “For external stakeholders”

- “For general university community”

5. Tips and Limitations

File Upload Tips:

- Multiple files: You can upload multiple files in a single conversation for comparison or combined analysis

- File naming: Use clear, descriptive file names so you and the AI can reference them easily

- Context documents: Upload reference materials (policies, templates) that can ground the AI’s responses

- Iterative refinement: Start with a basic prompt, then ask follow-up questions to dig deeper

- Save your prompts: If you find an effective prompt for a file type, save it as a Prompt Template for reuse

What Works Best:

- Text-based files: PDFs, Word docs, and spreadsheets with clear structure

- Clean data: Well-formatted spreadsheets with labeled columns

- Shorter files: Files under 50 pages typically process more reliably

- Native formats: Original file formats (not scanned images of documents)

Limitations to Be Aware Of:

File Size Limits:

- Large files (typically over several MB) may exceed processing limits

- Very long documents may need to be processed in sections

- Use Claude 3 for longer documents (largest context window)

Scanned PDFs:

- May require OCR (Optical Character Recognition)

- Text recognition might not be 100% accurate

- Images of text are harder to process than native digital text

- Workaround: Use OCR software first, then upload the text version

Highly Formatted Documents:

- Complex formatting may be lost or misinterpreted

- Tables and charts might not render perfectly

- Multi-column layouts can cause reading order issues

- Workaround: Simplify formatting before upload or provide context about the structure

Protected or Encrypted Files:

- Password-protected files cannot be processed

- DRM-protected documents cannot be opened

- Workaround: Remove protection before uploading (if you have permission)

Image and Multimedia Content:

- Images within documents may not be analyzed

- Charts and graphs might not be fully interpreted

- Videos and audio files are not currently supported

- Workaround: Describe visual content or extract data from charts manually

Troubleshooting Upload Issues:

If Your File Fails to Upload:

- Try saving it in a different format (e.g., convert complex PDF to plain text or simpler PDF)

- Reduce the file size by compressing or splitting into smaller sections

- Remove any password protection

- Check that the file isn’t corrupted by opening it on your computer first

- Try a different browser (Chrome, Edge, or Firefox work best)

If Results Are Unsatisfactory:

- Refine your prompt to be more specific

- Try a different model (Claude 3 for long documents, GPT-4 for structured analysis)

- Break the task into smaller steps using prompt chaining

- Upload a cleaner version of the file

- Provide more context about what you’re looking for

Pro Tips:

- Name your files clearly: “Q4_Sales_Report_2023.xlsx” is better than “data.xlsx”

- Clean your data first: Remove unnecessary rows, fix formatting issues

- Test with smaller sections: If processing a long document, test with a few pages first

- Use the right model: Claude 3 for long documents, GPT-4 for complex analysis

- Keep a prompt library: Save successful prompts for different file types

File upload capabilities make Amplify incredibly versatile for document analysis, data interpretation, and content transformation. Experiment with different file types and prompts to discover what works best for your workflow.


1. Core Chat Features

Amplify provides a comprehensive suite of AI-powered tools designed for secure, productive work within the UNC System Office environment.

Multi-Model Access

Choose from GPT-4, Claude 3, GPT-3.5, and other leading models in one interface. Each model has unique strengths—select based on your task complexity and needs.

File Upload Support

Analyze and process multiple file types including:

- PDFs, Word documents (.docx), PowerPoint (.pptx)

- Excel spreadsheets (.xlsx), CSV files

- Text files, code files, and more

Perfect for document summarization, data analysis, and content extraction.

Conversation Management

- Organize chats in folders by theme or project

- Rename conversations for easy identification

- Search past conversations by keyword

- Export chats as Word or PowerPoint files

2. Customization Tools

Assistants

Similar to custom GPTs, Assistants act as specialized helpers for your specific business needs. Think of them as AI experts trained on your documents and instructions.

What Assistants Can Do:

- Upload specific documents: Ground the AI in your departmental policies, procedures, or reference materials

- Point to web data sources: Connect to relevant online information

- Custom instructions: Define how the Assistant should respond and behave

- Embeddable paths: Create a subdomain that can be embedded in your websites for direct access

Example Use Cases:

- HR Assistant with uploaded benefits documents to answer employee FAQs

- Policy Assistant trained on UNC System Office procedures

- Data Analysis Assistant with department-specific reporting templates

Prompt Templates

Pre-written starting points for your conversations that save time and ensure consistency.

Why Use Prompt Templates:

- Save time on repetitive tasks

- Provide the AI with necessary context automatically

- Use variables like {{Topic}}, {{Audience}}, or {{file:document}} for flexibility

- Share successful templates with colleagues

Perfect for: Policy briefs, email responses, meeting summaries, report generation
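To make the variable idea concrete, the sketch below fills `{{Name}}` placeholders from a dictionary. It is only an illustration of the substitution concept: `fill_template` is a hypothetical helper, how Amplify resolves variables internally is not described here, and file variables such as {{file:document}} are treated as plain text by this sketch.

```python
import re

# Matches placeholders such as {{Topic}} or {{Audience}}.
PLACEHOLDER = re.compile(r"\{\{\s*([^{}]+?)\s*\}\}")


def fill_template(template: str, values: dict[str, str]) -> str:
    """Replace each {{Name}} with values["Name"]; raise if a value is missing."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in values:
            raise KeyError(f"No value supplied for placeholder: {name}")
        return values[name]

    return PLACEHOLDER.sub(substitute, template)


if __name__ == "__main__":
    template = "Write a policy brief on {{Topic}} for {{Audience}}."
    print(fill_template(template, {"Topic": "data retention", "Audience": "department heads"}))
```

Raising an error on a missing value, instead of leaving the placeholder in place, avoids sending a half-filled prompt.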

Custom Instructions

Similar to “Gems” in Google Gemini, Custom Instructions provide overarching guidelines that the AI follows across an entire chat session.

How Custom Instructions Work:

- Set at the beginning of a conversation

- Apply to all responses in that chat

- Can be edited per session

- Make responses more consistent with your preferences

Examples:

- “Always respond in bullet points”

- “Act as a compliance advisor for higher education”

- “Use UNC System Office terminology and style”

- “Prioritize accuracy over creativity”

API Access

Create personal or resource account API keys to programmatically access Amplify assets.

API Use Cases:

- Integrate Amplify with existing chatbot interfaces

- Send and receive prompt/response data programmatically

- Build custom applications that leverage Amplify’s AI capabilities

- Automate workflows and data processing
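As a rough illustration of sending a prompt programmatically, the Python sketch below builds (but does not send) an authenticated HTTP request. Everything specific in it is an assumption: the URL, the bearer-token header, and the payload field names `prompt` and `temperature` are placeholders, not the documented Amplify API. Check the Amplify API documentation for your deployment for the real endpoint and request schema.

```python
import json
import urllib.request

# Hypothetical values: replace with the real endpoint and your own API key.
API_URL = "https://amplify.example.edu/api/chat"
API_KEY = "your-api-key-here"


def build_chat_request(prompt: str, temperature: float = 0.2) -> urllib.request.Request:
    """Build a POST request carrying a prompt as JSON (assumed payload shape)."""
    payload = {"prompt": prompt, "temperature": temperature}  # assumed field names
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {API_KEY}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request("Summarize the attached policy in three bullet points.")
print(request.get_method(), request.full_url)

# To send it once the real URL and key are in place:
# with urllib.request.urlopen(request, timeout=30) as response:
#     print(json.load(response))
```

Keeping the key in a variable here is only for readability; in real use, load it from an environment variable or a secrets store rather than committing it to code.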

3. Collaboration Features

Sharing

Share conversations, templates, instructions, and folders with other UNC Amplify users securely.

- Select recipients by UNC email address

- Add optional notes explaining the shared content

- Recipients can import and customize shared items

Import Shared Content

- Navigate to “Shared with You” in the left sidebar

- Review items shared by colleagues

- Import into your workspace and adapt to your needs

Export Options

- Export individual responses or entire chats

- Download as Word (.docx) for reports

- Export as PowerPoint (.pptx) for presentations

- Back up conversations as JSON for device transfer

4. Temperature Settings

Temperature controls how precise or creative the AI’s responses will be. Think of it as a dial between “stick to the facts” and “think outside the box.”

What is Temperature?

Temperature is a value between 0 and 1 that affects how predictable or diverse the AI’s output will be.

Low Temperature (Closer to 0 – e.g., 0.2)

Characteristics:

- More deterministic and consistent responses

- Selects the most probable words and phrases

- Focused, precise, and less varied output

- Minimal creativity or randomness

Best for:

- Technical explanations and documentation

- Factual content and data analysis

- Specific instructions or procedures

- Code generation and debugging

- Tasks requiring accuracy and consistency

High Temperature (Closer to 1 – e.g., 0.8)

Characteristics:

- More stochastic (random) responses

- Considers less probable words and phrases

- Diverse, creative, and less predictable output

- Greater variety in responses

Best for:

- Creative writing and brainstorming

- Generating multiple perspectives

- Marketing copy and engaging content

- Exploring different approaches to problems

- Tasks where variety and novelty are valued
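The difference between the two settings can be seen in a small Python sketch of temperature-scaled softmax, the standard way temperature reshapes a model's word probabilities. This shows the general technique only; how each model behind Amplify applies the setting is not stated here, and the three candidate words and their scores are made up for illustration.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate next words.
candidates = {"policy": 2.0, "guideline": 1.0, "saga": 0.0}

low = softmax_with_temperature(list(candidates.values()), 0.2)
high = softmax_with_temperature(list(candidates.values()), 0.8)

for label, probs in (("temperature 0.2", low), ("temperature 0.8", high)):
    print(label, {word: round(p, 3) for word, p in zip(candidates, probs)})
```

At 0.2 nearly all of the probability lands on the top-scoring word, while at 0.8 the less likely words keep a meaningful share, which is why higher settings produce more varied output.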

When Should I Use Different Temperatures?

Setting the temperature depends primarily on your goal for the conversation:

Use Low Temperature (0.2-0.4) when you need:

- Precise, accurate information

- Consistent formatting across multiple outputs

- Technical or factual content

- Specific instructions or step-by-step procedures

Use High Temperature (0.7-0.9) when you want:

- Creative writing or storytelling

- Brainstorming and idea generation

- Varied responses to explore different angles

- More conversational or engaging tone

Pro Tip: Temperature is a tool for experimentation. Try different settings to see how they affect outputs for your specific use cases.

5. Response Length

Control how verbose the AI’s responses will be. Longer settings allow, but do not guarantee, longer answers.

Response Length Options:

Concise

- Short, direct responses

- Minimal token usage

- Best for quick answers or simple tasks

- Most cost-effective option

Average (Default)

- Balanced response length

- Suitable for most tasks

- Good mix of detail and efficiency

- Recommended starting point

Verbose

- Detailed, comprehensive responses

- More explanation and context

- Best for complex topics requiring depth

- Uses more output tokens (higher cost)

Best Practice:

If you don’t need highly verbose answers, we recommend leaving the setting at “Average” or adjusting to “Concise” to save on output tokens and reduce costs. You can always ask follow-up questions for more detail if needed.

Additional Resources

For comprehensive feature documentation and step-by-step guides, please refer to the Amplify User Guide.

1. Introductory Video: Welcome to Amplify! This video introduces you to our secure, internal platform designed to help you explore advanced AI tools safely.

2. Getting Started & Model Selection: Learn how to navigate the interface and select the right AI model for your specific task.

3. Choosing LLM Models: Explore the differences between models like GPT-4 and Claude to maximize your productivity.

4. Appropriate Use of Data Tiers

SAFETY ALERT: Never input Tier 3 data (SSNs, medical records). Only use Tier 1 and Tier 2 data.

5. Prompt Writing 101: Master the art of prompt engineering to get the most accurate and useful responses.

6. Using Work Documents: Discover how to securely upload and analyze documents to summarize complex information instantly.

7. Creating AI Assistants: Learn how to build custom assistants that are pre-grounded with your specific data and instructions.

8. Advanced Assistant Techniques: Refine your assistants for complex departmental workflows and collaborative tasks.

9. Sharing & Collaboration: Securely share chats and templates with colleagues to boost team efficiency.

10. Responsible Use & Best Practices: Review the final ethics and verification standards to ensure high-quality AI results.

Amplify Resource Library: Download essential PDF guides and documentation for the Amplify AI platform.
